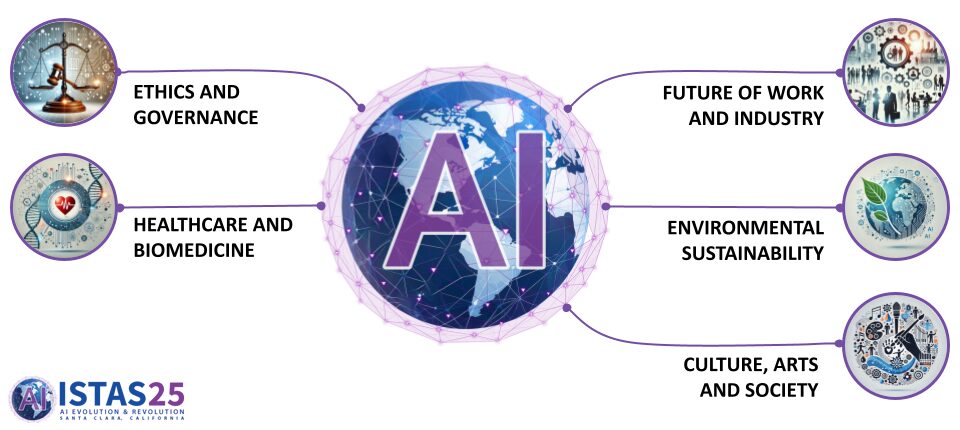

Thematic Areas of Interest

What is the conference about?

ISTAS25 focuses on the evolving role of artificial intelligence across sectors, systems, and everyday life. The conference brings together researchers, practitioners, thought leaders, decision-makers, and policymakers to examine how AI is shaping institutions, values, and futures.

The goal is not just to explore what AI can do, but to ask what it should do, and how it can be designed in ways that are responsible, inclusive, and grounded in human needs.

Artificial intelligence is reshaping nearly every aspect of life, from how we work and care for one another, to how we create, govern, and respond to the world around us.

The suggested themes reflect some of the most urgent and complex questions being asked today. They don’t offer final answers. Instead, they open space for critical discussion, new perspectives, and shared insight: the kind of thinking ISTAS25 is built to encourage.

Navigating the Ethics of AI: Building Trust, Transparency, and Accountability

From policing and healthcare to education and finance, AI is becoming part of our daily lives. As its presence grows, so does the urgency of governing it ethically. The Ethics and Governance track at ISTAS25 explores the frameworks, policies, and oversight needed to ensure that AI technologies serve humanity responsibly and equitably.

At the heart of this conversation are the ethical principles guiding AI’s development and deployment. While many companies and institutions have adopted AI ethics guidelines, a universal framework that is enforceable across borders and disciplines remains elusive. This absence raises essential questions about fairness, bias, and power in the digital age.

The policy landscape is rapidly evolving. Governments and international bodies are working to regulate AI systems while attempting to balance innovation with the need to protect civil liberties. From the EU AI Act to executive orders in the United States, regulatory initiatives vary in scope and enforceability. These efforts highlight the complexity of aligning legal frameworks across jurisdictions.

The use of AI by law enforcement is one of the most visible areas where this tension plays out. Predictive policing tools, facial recognition technologies, and surveillance systems raise concerns about discrimination, accountability, and oversight. These applications demand not only technical scrutiny but also robust ethical and legal review.

Transparency and explainability in AI systems remain essential for building public trust. As complex models are deployed in sensitive settings, there is a growing demand for tools that help users and regulators understand how decisions are made.

Privacy also remains a central concern in the ethical use of AI. The collection, use, and potential misuse of personal data continue to spark debates about consent, digital autonomy, and surveillance. Designing systems that protect individual rights without limiting innovation is a key challenge.

Ultimately, responsible AI development requires careful attention to oversight, accountability, and governance. Innovation must be supported by structures that ensure technology aligns with public values. The societal stakes involved are too significant to ignore.

Do you have thoughts on oversight, transparency, or the ethical use of AI? Share your perspective and join the conversation.

When you’re ready, submit a paper here.

AI in Healthcare: Unlocking Potential, Confronting Ethical Challenges

Artificial intelligence is transforming healthcare at every level. From faster diagnostics to tailored treatment plans, AI is already reshaping how medical professionals deliver care and how patients experience it. The Healthcare and Biomedicine track at ISTAS25 explores the opportunities, risks, and responsibilities that come with the rapid integration of AI into health systems.

AI-driven diagnostic tools are among the most visible innovations in this space. By analyzing complex medical data such as imaging, lab results, and patient records, AI can assist clinicians in identifying conditions more quickly and accurately. These tools are already improving outcomes in areas such as cancer detection, cardiovascular monitoring, and radiology.

Personalized medicine represents another frontier. With the help of machine learning, physicians can design treatment plans based on individual genetic profiles, lifestyle patterns, and health histories. This tailored approach promises more effective therapies and better patient engagement.

The role of AI in drug discovery is also gaining momentum. Algorithms are being used to identify promising compounds, simulate their effects, and accelerate the early phases of development. These methods can shorten timelines and reduce the costs of bringing new treatments to market.

However, the benefits of AI in healthcare come with significant ethical considerations. Decision-making algorithms must be transparent, accountable, and fair. In high-stakes environments like emergency care or long-term treatment, it is essential to understand how AI systems arrive at their recommendations and whether they reinforce existing biases.

The integration of AI into caregiving also raises new questions. What role should AI play in monitoring, companionship, or decision-making in eldercare or mental health support? These technologies are designed to assist, but they must respect human dignity and autonomy.

AI tools support individual patients, but they also play a role in addressing broader public health challenges. From predicting the spread of infectious diseases to optimizing resource allocation in underserved regions, these applications hold enormous potential. However, they must be guided by inclusive data practices and equitable access.

If you’re working on or thinking about AI in medicine, care, or public health, share your work with the ISTAS25 community.

When you’re ready, submit a paper here.

AI and the Future of Work: Redefining Roles, Skills, and Responsibility

Artificial intelligence is reshaping the world of work. From how decisions are made to who makes them, AI is changing industries, job markets, and workplace dynamics in real time. The Future of Work and Industry track at ISTAS25 invites reflection on what these changes mean for employment, leadership, legal frameworks, and the broader economy.

A central question is who or what is in charge. As AI systems become more capable of performing complex tasks, organizations must determine how much authority to delegate to machines. This shift raises concerns about accountability, transparency, and the role of human judgment in decision-making processes.

The impact on job markets is significant. Some roles are being automated, while others are evolving to require new skills in data analysis, system management, and human-machine collaboration. Rather than eliminating work, AI is transforming it, which makes reskilling and lifelong learning critical for an inclusive transition.

AI also influences how people are hired and managed. In recruitment and human resources, algorithms are used to screen applications, assess performance, and guide promotions. These systems promise efficiency but also present risks related to bias, fairness, and data privacy.

Governance is another primary concern. As AI becomes integrated into business operations, legal and ethical frameworks must evolve in tandem. Employers, regulators, and developers must work together to ensure that AI is used responsibly, particularly when it affects people’s livelihoods and rights.

At the economic level, AI adoption raises questions about value creation, productivity, and inequality. For example, some logistics firms use AI to improve delivery efficiency, while other organizations are rethinking workforce roles entirely. Addressing these shifts requires coordination across industry, education, and public policy.

Infrastructure is also part of the conversation. AI technologies depend on data centers, connectivity, and digital tools. These requirements will shape how businesses operate and how cities and countries invest in their future economies.

Are you exploring how AI is changing jobs, leadership, or labor systems? Contribute your insights to this year’s dialogue.

When you’re ready, submit a paper here.

AI for Environmental Sustainability: Innovation with Responsibility

Artificial intelligence is playing a growing role in addressing environmental challenges. From monitoring ecosystems to optimizing renewable energy, AI offers new tools to support climate action and sustainability efforts. The Environmental Sustainability track at ISTAS25 explores how these technologies can be applied responsibly and effectively to benefit the planet and society.

One of the most promising areas is climate change modeling. AI systems can process large volumes of environmental data to predict weather patterns, simulate climate scenarios, and identify potential risks. These insights help inform policy, disaster preparedness, and long-term planning.

Smart cities are also using AI to manage resources more efficiently. Applications include optimizing traffic flow, monitoring air quality, and coordinating energy use in real time. These innovations contribute to more sustainable urban living and a better quality of life.

In the energy sector, AI is being used to improve the performance of renewable systems. Algorithms can forecast solar and wind energy production, balance supply and demand, and detect faults in infrastructure. These improvements help make clean energy more reliable and scalable.

AI also supports conservation efforts. By analyzing satellite imagery and sensor data, it is possible to detect deforestation, track wildlife, and monitor ecosystems. These tools offer faster and more accurate insights for researchers and environmental organizations.

However, the use of AI in environmental decision-making raises ethical questions. Transparency, fairness, and data accessibility must be considered to ensure that environmental technologies do not reproduce existing inequities or exclude vulnerable communities.

Another area of concern is energy consumption. Training large AI models requires significant computational power, which increases electricity use and emissions. Sustainable AI design practices are essential to reduce the environmental footprint of these technologies.

If your work intersects with AI and climate, energy, or conservation, share a proposal and join the discussion.

When you’re ready, submit a paper here.

AI and Society: Shaping Culture, Creativity, and Public Trust

Artificial intelligence is not only transforming industries and infrastructures but also reshaping the fabric of society. The Culture, Arts, and Society track at ISTAS25 explores how AI influences relationships, creativity, communication, and public discourse. These changes affect how people learn, connect, and understand the world around them.

One area of growing interest is the impact of AI on human interactions. Social robots, chatbots, and digital assistants are becoming more integrated into daily life. This shift raises questions about how relationships, empathy, and communication evolve when people interact with machines.

Education is also being influenced by AI. Personalized learning platforms, automated tutoring systems, and adaptive assessments are designed to support students in new ways. At the same time, educators are facing challenges around algorithmic bias, access, and the role of teachers in AI-supported classrooms.

Creativity is undergoing a transformation. AI-generated art, music, and literature are sparking debate about authorship, originality, and artistic value. While these tools offer exciting new possibilities, they also challenge traditional ideas about human expression and intellectual property.

AI also plays a role in shaping democratic systems and public discourse. From content curation to political messaging, algorithms affect how information is distributed and consumed. This influence creates both opportunities and risks for civic engagement, freedom of expression, and informed decision-making.

Cultural perceptions of AI vary globally. Attitudes toward automation, data sharing, and digital agency are shaped by values, history, and social norms. Understanding these differences is essential for developing inclusive and effective AI systems.

Misinformation is another growing concern. Generative AI tools can produce convincing but false content, which may affect public trust and social cohesion. Platforms and institutions must consider how to address these risks without limiting access to creative or educational tools.

Social media remains a powerful space for both innovation and disruption. AI is used to personalize content, moderate posts, and influence engagement. These tools can foster connection, but they also contribute to echo chambers and online polarization.

Are you interested in how AI is shaping creativity, connection, or public discourse? Share your perspective with others exploring the same questions.

When you’re ready, submit a paper here.